This week I brought my terminal renderer to the next level by performing text rendering on the GPU.

I first messed around with rendering images to the terminal with Braille characters in like 2022 I think? I wrote a simple CLI tool that applied a threshold to an input image and output it as Braille characters in the terminal. Here's a recording I took back when I did it.

Dot bit positions within a Braille cell, row by row (left dot, right dot): 0 3, 1 4, 2 5, 6 7.

The Unicode Braille Patterns block covers the code points between 0x2800 (⠀) and 0x28FF (⣿). In other words, every character within the block can be represented by changing the value of a single byte.

The lowest 6 bits of the pattern map onto a 6-dot Braille pattern. However, for historical reasons the two extra dots of the 8-dot patterns were tacked on after the fact, which adds a slightly annoying mapping to the conversion process. Either way, it's a lot easier than it could be: just read a pixel value, check its brightness, and then use a bitwise operation to set or clear a dot.
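A minimal Rust sketch of that mapping, assuming a 2x4 block of 8-bit brightness values per character. The names `dot_bit` and `braille_cell` are hypothetical and this is not the code from the original tool, just one way the bit layout could be applied.

```rust
/// Bit index for the dot at column `x` (0..2) and row `y` (0..4) of a cell.
/// Rows 0-2 use bits 0-5 (the 6-dot layout); row 3 uses bits 6 and 7.
fn dot_bit(x: usize, y: usize) -> u32 {
    const BITS: [[u32; 2]; 4] = [[0, 3], [1, 4], [2, 5], [6, 7]];
    BITS[y][x]
}

/// Builds one Braille character from a 2x4 block of brightness values
/// (indexed as block[row][column]), lighting every dot whose brightness
/// exceeds `threshold`.
fn braille_cell(block: [[u8; 2]; 4], threshold: u8) -> char {
    let mut pattern: u32 = 0;
    for y in 0..4 {
        for x in 0..2 {
            if block[y][x] > threshold {
                pattern |= 1 << dot_bit(x, y);
            }
        }
    }
    // 0x2800 is the blank pattern; the low byte selects which dots are raised.
    char::from_u32(0x2800 + pattern).unwrap_or(' ')
}
```

Calling `braille_cell` with an all-dark block gives the blank pattern at 0x2800, and an all-bright block gives the full pattern at 0x28FF.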

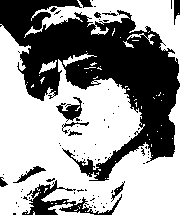

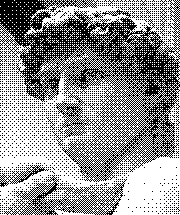

Comparing the brightness of a pixel against a constant threshold is a fine way to display black and white images, but it's far from ideal and often results in the loss of a lot of detail from the original image.

By using ordered dithering, we can preserve much more of the subtleties of the original image. While not the "truest" version of dithering possible, ordered dithering (and Bayer dithering in particular) provides a few advantages that make it very well suited to realtime computer graphics:

My first attempt at realtime terminal graphics with ordered dithering (I put a video up at the time) ran entirely on the CPU. I pre-calculated the threshold map at the beginning of execution and ran each frame through a sequential function to dither it and convert it to Braille characters.
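As a rough illustration of that CPU approach, here is a small Rust sketch of ordered dithering with a pre-computed threshold map. It assumes the standard 4x4 Bayer matrix, a row-major 8-bit grayscale frame, and a non-zero `width`; the matrix size and the names `threshold_map` and `dither` are assumptions for the example, not details taken from the original renderer.

```rust
/// Standard 4x4 Bayer index matrix (values 0..=15).
const BAYER_4X4: [[u8; 4]; 4] = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
];

/// Pre-computes one brightness threshold (0..=255) per matrix position.
fn threshold_map() -> [[u8; 4]; 4] {
    let mut map = [[0u8; 4]; 4];
    for y in 0..4 {
        for x in 0..4 {
            // Centre each of the 16 levels in its bucket: (i + 0.5) / 16 * 255.
            map[y][x] = ((BAYER_4X4[y][x] as u32 * 2 + 1) * 255 / 32) as u8;
        }
    }
    map
}

/// Dithers a row-major grayscale frame into on/off dots by comparing each
/// pixel with the threshold at its position in the tiled map.
fn dither(pixels: &[u8], width: usize, map: &[[u8; 4]; 4]) -> Vec<bool> {
    pixels
        .iter()
        .enumerate()
        .map(|(i, &p)| {
            let (x, y) = (i % width, i / width);
            p > map[y % 4][x % 4]
        })
        .collect()
}
```

Because the map tiles across the frame, a flat mid-gray area turns on roughly half of its dots instead of collapsing to all-on or all-off, which is where the extra detail comes from.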

To be honest, I never noticed any significant performance issues doing this. As you can imagine, the image size required to fill a terminal screen is significantly smaller than a normal window. However, I knew I could easily perform the dithering on the GPU as a post-processing effect, so I eventually wrote a shader to do that. In combination with another effect I used to add outlines to objects, I was able to significantly improve the visual fidelity of the experience. A good example of where the renderer was at until like a week ago can be seen in this video.

Until now I hadn't really considered moving the text conversion to the GPU. I mean, the GPU is for graphics, right? I just copied the entire framebuffer back onto the CPU after dithering and used the same sequential conversion algorithm. Then I had an idea that would drastically reduce the amount of copying necessary.

What if, instead of extracting and copying the framebuffer every single frame, we "rendered" the text on the GPU and read that back instead? Assuming each pixel in a texture is 32 bits (RGBA8), and knowing that each Braille character covers a block of 8 pixels (32 bytes of pixel data), could we not theoretically shave off at least 7/8 of the bytes copied?
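To put numbers on that, here is a quick back-of-the-envelope calculation in Rust. It assumes one 32-bit value is read back per character, and the 80x24 terminal size in the usage note is just a hypothetical example.

```rust
const BYTES_PER_PIXEL: usize = 4; // RGBA8
const PIXELS_PER_CELL: usize = 8; // one Braille character covers 2x4 pixels
const BYTES_PER_CHAR: usize = 4; // assumption: one 32-bit value per character

/// Bytes copied per frame when reading back the whole framebuffer.
fn framebuffer_bytes(cols: usize, rows: usize) -> usize {
    cols * rows * PIXELS_PER_CELL * BYTES_PER_PIXEL
}

/// Bytes copied per frame when reading back one value per character.
fn text_bytes(cols: usize, rows: usize) -> usize {
    cols * rows * BYTES_PER_CHAR
}
```

For an 80x24 terminal, `framebuffer_bytes(80, 24)` is 61,440 bytes while `text_bytes(80, 24)` is 7,680 bytes, which is exactly 1/8 of the original copy.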

As it turns out, it's remarkably easy to do. I'm using the Bevy engine, and hooking in a compute node to my existing post-processing render pipeline worked right out of the box. I allocated a storage buffer large enough to hold the necessary amount of characters, read it back each frame, and dumped the contents into the terminal.
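The shader itself isn't shown here, but the work each invocation does can be sketched on the CPU in Rust: every character cell reads its own 2x4 block of dithered dots and writes a single `u32` into the output buffer, independently of every other cell. The function names, the `bool` input, and the assumption that the frame width is a multiple of 2 and the height a multiple of 4 are all illustrative, not taken from the actual pipeline.

```rust
/// Computes the code point for the cell at (`col`, `row`), reading its 2x4
/// block from a row-major frame of dithered dots that is `width` pixels wide.
fn cell_code_point(dots: &[bool], width: usize, col: usize, row: usize) -> u32 {
    const BITS: [[u32; 2]; 4] = [[0, 3], [1, 4], [2, 5], [6, 7]];
    let mut pattern = 0u32;
    for dy in 0..4 {
        for dx in 0..2 {
            let (x, y) = (col * 2 + dx, row * 4 + dy);
            if dots[y * width + x] {
                pattern |= 1 << BITS[dy][dx];
            }
        }
    }
    0x2800 + pattern
}

/// Fills a buffer with one 32-bit code point per cell. On the GPU each
/// (col, row) pair would be handled by its own invocation rather than a loop.
fn pack_frame(dots: &[bool], width: usize, height: usize) -> Vec<u32> {
    let (cols, rows) = (width / 2, height / 4);
    let mut out = vec![0u32; cols * rows];
    for row in 0..rows {
        for col in 0..cols {
            out[row * cols + col] = cell_code_point(dots, width, col, row);
        }
    }
    out
}
```

Since no cell depends on another cell's result, the two loops in `pack_frame` are the part that maps naturally onto a compute dispatch.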

I used UTF-32 encoding on the storage buffer because I knew I could easily convert a "wide string" into UTF-8 before printing it, and 32 bits provides a consistent space to fill for each workgroup in the shader versus a variable-length encoding like UTF-8. Here's a video of the new renderer working. Although now that I think about it, I could probably switch to using UTF-16 since all the Braille characters could be represented in 2 bytes, and that would be half the size of the UTF-32 text, which is half empty bytes anyways.
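For the UTF-32 to UTF-8 step, a minimal Rust sketch could look like the following. It assumes the buffer has already been read back as a slice of `u32` code points laid out row by row; `buffer_to_string` and its `cols` parameter are hypothetical names for the example.

```rust
/// Converts a read-back buffer of UTF-32 code points into a UTF-8 string,
/// inserting a newline after every `cols` characters.
fn buffer_to_string(buffer: &[u32], cols: usize) -> String {
    let cols = cols.max(1); // avoid a zero-sized chunk
    // Braille characters take 3 bytes each in UTF-8, plus one newline per row.
    let mut out = String::with_capacity(buffer.len() * 3 + buffer.len() / cols);
    for line in buffer.chunks(cols) {
        for &code in line {
            // Invalid code points become U+FFFD instead of panicking.
            out.push(char::from_u32(code).unwrap_or(char::REPLACEMENT_CHARACTER));
        }
        out.push('\n');
    }
    out
}
```

Rust's `String` is always UTF-8, so pushing each `char` performs the re-encoding and the result can be written straight to stdout.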

Okay, so I went and tried that, but then remembered that shaders only accept 32-bit primitive types, so it doesn't matter anyways.

This little side quest is part of my broader efforts to revive a project I spent a lot of time on. I'm taking the opportunity to really dig in and rework some of the stuff I'm not totally happy with, so there might be quite a few posts like this in the near future. Stay tuned.